Data Lakes – The Apache Spark Ecosystem

Once you have stored data you need to process it. Enters the distributed processing system called Apache Spark. Spark reads and processes data on a cluster of machines and, once processed, writes it back to either a distributed file system, to an object store like S3 or to a NoSQL or cloud-native database.

Did we mention already we love open-source? In this blog we’ll discuss Apache Spark, at this moment probably the fastest cluster computing system for data analytics.

Apache Spark is developed through the open-source community and driven by Databricks which of course has a fully payable service on top of Spark but you’ll quickly discover that each cloud vendor supports Apache Spark clusters.

Standalone vs Cluster

It’s quite easy for instance to spin up a cluster of a couple of machines on Googles DataProc service for free. Which makes it a good candidate to try-out Apache Spark.

It’s also quite easy to install it on a standalone system but keep in mind that the full power of Spark actually comes when using a cluster of machines.

For stand-alone systems you can actually stick with Pandas and don’t need Spark. But for educational purposes it’s of course ok to start with a standalone environment.

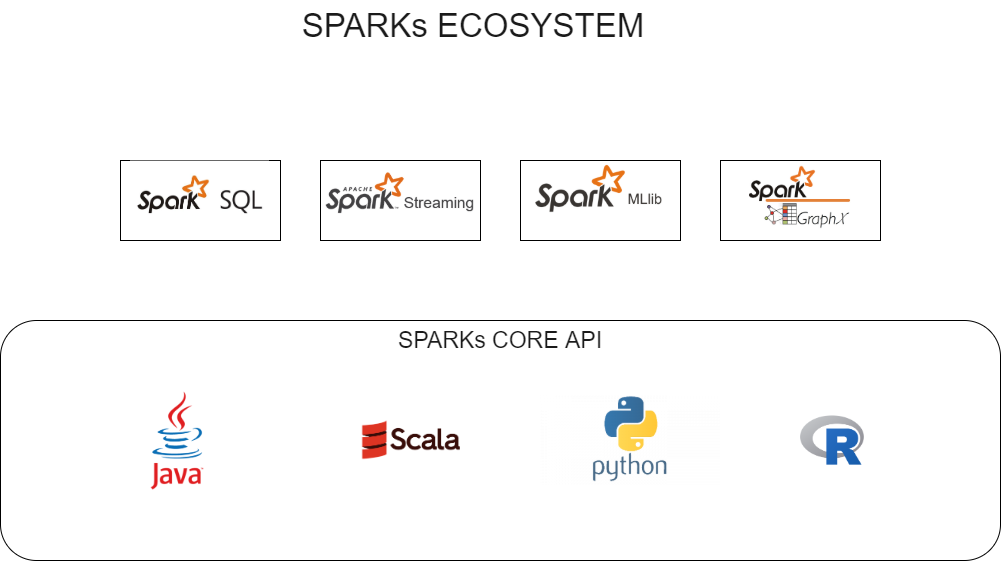

Apache Spark is a fast cluster computing system. It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports execution graphs.

The Spark Ecosystem

Apache Spark is actually an eco-system and it exists of:

- Spark SQL for SQL and structured data processing

- Spark Streaming for stream processing

- MLlib for machine learning

- GraphX for graph processing

The Resilient Distributed Dataset (RDD)

One of the core components of Spark is the RDD (Resilient Distributed Dataset). It is the fundamental data structure of Apache Spark. An RDD in Apache Spark is an immutable collection of objects which computes on different nodes of the cluster.

It is

- Resilient, i.e. fault-tolerant with the help of an RDD lineage graph(DAG). Hence it is able to recompute missing or damaged partitions due to node failures

- Distributed, since Data resides on multiple nodes

- Dataset since it represents records of the data you work with. The user can load the data set externally. It can be either JSON file, CSV file, text file or database via JDBC.

Each and every dataset in the RDD is logically partitioned across many servers so that they can be computed on different nodes of the cluster. RDDs are fault tolerant. They deliver self-recovery in the case of failure.

Spark SQL

Spark SQL

The Spark SQL module provides mainly 2 components to the Spark Ecosystem:

- Dataframes

- SQL

A Spark Dataframe is conceptually equivalent to a table in a relational database or a spreadsheet in Excel, but with richer optimizations under the hood.

It is different from an RDD since it can be optimized with a Query Optimization engine we’ll discuss in a future blog. While it’s still possible to program to the lower-level RDD API, in most cases you’ll use the Dataframe API.

You can construct dataframes from a wide array of sources such as structured data files, tables in external databases, etc. Data can also be joined, merged, pivoted etc. with the Spark Dataframe API and this all through a distributed processing engine.

Spark Dataframe vs Pandas Dataframe

The Spark Dataframe API however is, although quite similar, different from the Pandas Dataframe API (Pandas is probably the most popular python library to deal with datasets).

There’s actually an open-source project called Koalas that brings the Pandas API to Spark dataframes. This ensure that data scientists familiar with Pandas can actually use Spark Dataframes without a steep learning curve.

The power of SQL

Spark SQL brings the power of SQL to Spark Dataframes. By using simple SQL statements, you can do almost anything that you would do through the Spark Dataframes API.

This brings an additional advantage. Not everyone needs to be an expert Python, R, Java or Scala programmer. Through some simple statements you almost immediately can start using SQL to start transforming datasets through Spark.

This also allows to decrease the learning curve in the organization to start working with Spark.

Although we must give a little warning. Creating optimized Spark SQL data pipelines, whether its done programmatically or with a bunch of SQL code, is not a trivial task. It requires some expertise to be able to build fast data pipelines.

Actually, it requires that you understand how several operations will have an impact on the cluster performance, how sharded data will be shuffled and how this can be optimized for performance.

It also helps if you understand how Spark SQL is optimized. We’ll discuss this topic later in a future blog.

Spark Streaming

Spark Streaming

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams.

Data can be ingested from many data streaming sources like for instance Apache Kafka or Amazon Kinesis and can be processed using complex algorithms.

Finally, processed data can be pushed out to filesystems, databases, and live dashboards.

And since Apache Spark is an ecosystem, you actually can apply Spark’s machine learning and graph processing algorithms on these streaming dataframes.

Spark MLLib

Spark MLLib

MLlib is Spark’s machine learning (ML) library. It makes practical machine learning scalable and easy. At a high level, it provides tools such as:

- ML Algorithms: common learning algorithms such as classification, regression, clustering, and collaborative filtering

- Featurization: feature extraction, transformation, dimensionality reduction, and selection, all through the same Dataframe API as discussed before

- Pipelines: tools for constructing, evaluating, and tuning ML Pipelines. Through pipelines features will be extracted from datasets, feature transformations will be done on dataframes, training will be executed and final model results will be generated

- Persistence: saving and load algorithms, models, and Pipelines

- Utilities: linear algebra, statistics, data handling, etc.

In addition also images can be loaded into Spark Dataframes, hence opening up a lot of possibilities in creating ML pipelines for deep learning systems on image classification problems.

Moreover, Spark can be used also in combination with Keras, Tensorflow and other deep learning frameworks, leveraging hence distributed processing for these frameworks.

Spark GraphX

Spark GraphX

GraphX is a component in Spark for graphs and graph-parallel computation. At a high level, GraphX introduces a new Graph abstraction: a directed multigraph with properties attached to each vertex and edge.

To support graph computation, GraphX exposes a set of fundamental operators (e.g., subgraph, joinVertices, and aggregateMessages) as well as an optimized variant of the Pregel API (originally developed by Google).

In addition, GraphX includes a growing collection of graph algorithms and builders to simplify graph analytics tasks.

Key take-away:

Apply Apache Spark for distributed computing in your data lake. It can be a cornerstone of your data transformation pipelines, ML training pipelines, graph processing pipelines and streaming pipelines.

This blog is part of our Data Lake Series: Data Lake – Reference Architecture

Want to know more?

Get in Touch

Originally I started my career as an expert in OO design and development.

I shifted more than 15 years ago to data warehousing and business intelligence and specialised in big data and data science.

My main interests are in deep learning and big data technologies.

My mission: store, process and deliver data fast, provide insights in data, design ML models and apply them to smart devices.

In my spare time I’m very passionate about Salsa dancing. So much that I performed internationally and that I have my own dance school where I teach LA style salsa.