Data Lakes – Amazon S3

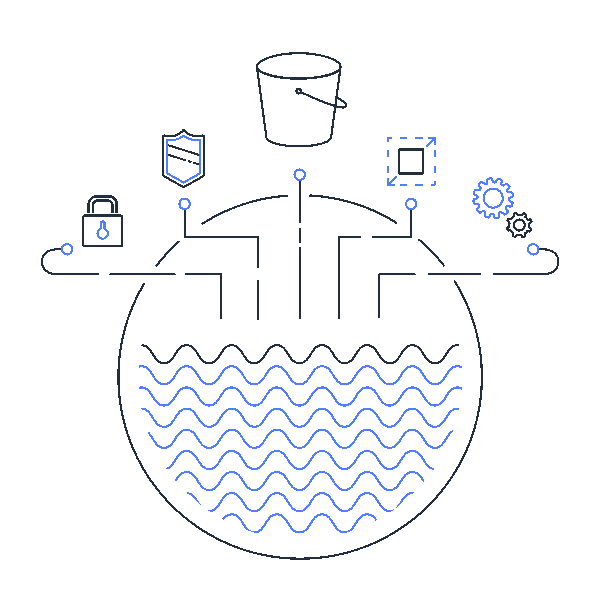

The first requirement for any data lake is that it can store the raw data we want to use for analytics, in any format, whether structured or unstructured. We may want to store flat files, images, audio files and so on.

Amazon S3 object storage is well suited to this. It is not a distributed file system but an object store with virtually unlimited capacity. As an alternative, you could use a distributed file system or an object store from another vendor such as Google.

Because S3 capacity is virtually unlimited, you can store whatever you want and as much as you want. Most big data platforms, such as Cloudera, Hortonworks, Databricks, Amazon EMR and Google's Dataproc, support S3.

Durable Data Storage

Amazon S3 automatically creates and stores copies of uploaded objects across multiple Availability Zones within a region (the One Zone storage classes are the exception). This keeps your data available when needed and protects it against failures, errors, and threats.

Multiple Storage Classes

With Amazon S3 you can store data across a range of S3 Storage Classes. Each storage class has different characteristics: some are designed for fast, frequent access, while others are meant for low-cost archiving.
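
To make the storage class idea concrete, here is a minimal sketch of a lifecycle rule that moves objects to cheaper classes as they age. The prefix `raw/`, the rule ID and the day thresholds are assumptions chosen for illustration, and the boto3 call is only executed if you call `apply_lifecycle_configuration` yourself with valid AWS credentials.

```python
import json


def build_lifecycle_configuration(prefix, ia_after_days=30, archive_after_days=90,
                                  rule_id="tier-down-raw-data"):
    """Build a lifecycle configuration dict (hypothetical thresholds and rule ID)."""
    if ia_after_days >= archive_after_days:
        raise ValueError("the archive transition must come after the infrequent-access one")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},  # only objects under this prefix
                "Status": "Enabled",
                "Transitions": [
                    # Infrequent access first, archive later.
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": archive_after_days, "StorageClass": "GLACIER"},
                ],
            }
        ]
    }


def apply_lifecycle_configuration(bucket, configuration):
    """Send the configuration to S3 (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket, LifecycleConfiguration=configuration
    )


if __name__ == "__main__":
    print(json.dumps(build_lifecycle_configuration("raw/"), indent=2))
```

Keeping the rule as a plain dictionary makes it easy to review or version before it is applied to a bucket.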

Data is stored as objects within resources called “buckets”. A single object can be up to 5 terabytes in size. S3 features include the ability to attach metadata tags to objects, move and store data across the S3 Storage Classes, configure and enforce data access controls, and secure data against unauthorized users.
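
As an illustration of objects, metadata and tags, the sketch below assembles the arguments for a boto3 `put_object` call. The bucket name, key, metadata and tag values are hypothetical; `build_put_object_args` is a helper invented for this example, not part of any library.

```python
from urllib.parse import urlencode


def build_put_object_args(bucket, key, body, metadata=None, tags=None,
                          storage_class="STANDARD"):
    """Assemble keyword arguments for S3 put_object (illustrative helper)."""
    args = {"Bucket": bucket, "Key": key, "Body": body, "StorageClass": storage_class}
    if metadata:
        # User-defined metadata: string keys and string values.
        args["Metadata"] = dict(metadata)
    if tags:
        # Object tags are passed as a URL-encoded query string.
        args["Tagging"] = urlencode(tags)
    return args


def put_object(args):
    """Upload the object (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    return boto3.client("s3").put_object(**args)


if __name__ == "__main__":
    example = build_put_object_args(
        bucket="example-data-lake",          # hypothetical bucket name
        key="raw/crm/customers.csv",         # hypothetical object key
        body=b"id,name\n1,Alice\n",
        metadata={"origin": "crm-export"},
        tags={"source": "crm", "zone": "raw"},
    )
    print(example["Tagging"])
```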

Scalable

Storage capacity scales up instantly, on demand.

Secure your data

Access management is provided through multiple mechanisms:

- AWS Identity and Access Management (IAM) to create users and manage their access

- Access Control Lists (ACLs) to make individual objects accessible to authorized users

- Bucket policies to configure permissions for all objects within a single S3 bucket

- S3 Access Points to simplify managing data access to shared data sets by creating access points with names and permissions specific to each application or sets of applications
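
Of the options listed above, a bucket policy is probably the easiest to show in code. The following is a minimal sketch that grants one principal read-only access to a prefix; the bucket name, account ID, role name and prefix are all made up for the example.

```python
import json


def build_read_only_policy(bucket, principal_arn, prefix=""):
    """Build a bucket policy allowing one principal to read objects under a prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowPrefixRead",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": ["s3:GetObject"],
                # Object ARNs under the given prefix.
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


def apply_bucket_policy(bucket, policy):
    """Attach the policy to the bucket (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    boto3.client("s3").put_bucket_policy(Bucket=bucket, Policy=json.dumps(policy))


if __name__ == "__main__":
    policy = build_read_only_policy(
        bucket="example-data-lake",                               # hypothetical
        principal_arn="arn:aws:iam::123456789012:role/analytics",  # hypothetical
        prefix="raw/",
    )
    print(json.dumps(policy, indent=2))
```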

Amazon S3 also supports audit logs that list the requests made against your S3 resources, giving you visibility into who is accessing what data.

Amazon S3 supports both server-side encryption and client-side encryption for data uploads.
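
For server-side encryption, one option is to set a default on the bucket so that new objects are encrypted automatically. The sketch below builds that configuration; the helper names are invented for this example, and the KMS key identifier is something you would supply yourself.

```python
def build_default_encryption(kms_key_id=None):
    """Build a default-encryption configuration for a bucket.

    Without a key ID, S3-managed keys (SSE-S3) are used; with one, SSE-KMS.
    """
    if kms_key_id:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id}
    else:
        default = {"SSEAlgorithm": "AES256"}
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}


def apply_default_encryption(bucket, configuration):
    """Apply the configuration (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    boto3.client("s3").put_bucket_encryption(
        Bucket=bucket, ServerSideEncryptionConfiguration=configuration
    )
```

Client-side encryption is a different approach: the data is encrypted before it leaves your application, so it is not covered by this bucket setting.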

Data Transfer

There are multiple options for transferring your data to S3 buckets, among them:

- The AWS Storage Gateway: applications connect to the service through a virtual machine or hardware gateway appliance using standard storage protocols, such as NFS, SMB and iSCSI.

- AWS DataSync for online transfers from existing storage systems, and the AWS Transfer Family to transfer files to S3 via SFTP, FTPS and FTP

- APIs such as the AWS SDKs or community libraries (e.g. Python S3 libraries) to programmatically transfer data from custom-built microservices to S3 buckets

- Native integrations with third-party service providers (see the AWS Partner Network) such as ETL tools and big data platforms
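
As a sketch of the SDK route from the list above, the snippet below uploads a local file with boto3. The key layout `raw/<source>/<yyyy>/<mm>/<dd>/<file>` is only an assumed convention for a raw zone, and the bucket and source names are hypothetical.

```python
from datetime import date
from pathlib import Path


def build_raw_key(source, local_path, ingest_date):
    """Build an object key partitioned by source and ingestion date (assumed layout)."""
    name = Path(local_path).name
    return f"raw/{source}/{ingest_date:%Y/%m/%d}/{name}"


def upload_raw_file(bucket, source, local_path, ingest_date=None):
    """Upload a local file to the bucket (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_raw_key works without boto3

    key = build_raw_key(source, local_path, ingest_date or date.today())
    boto3.client("s3").upload_file(str(local_path), bucket, key)
    return key


if __name__ == "__main__":
    print(build_raw_key("crm", "/tmp/export/customers.csv", date(2024, 3, 5)))
```

A date-partitioned key like this tends to make later processing by prefix easier, but any consistent naming scheme would work.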

Conclusion

The key takeaway for your data lake architecture:

Amazon S3 is a strong basis for your raw data storage requirements. At sAInce.io it is our preferred choice as the foundation of a data lake.

A valid alternative is a secure distributed file system like DBFS (the Databricks File System). Databricks is the driving force behind Apache Spark and one of the fastest-growing big data platform providers out there. They are also the driving force behind Delta Lake and MLflow.

This blog is part of our Data Lake series: Data Lake – Reference Architecture
